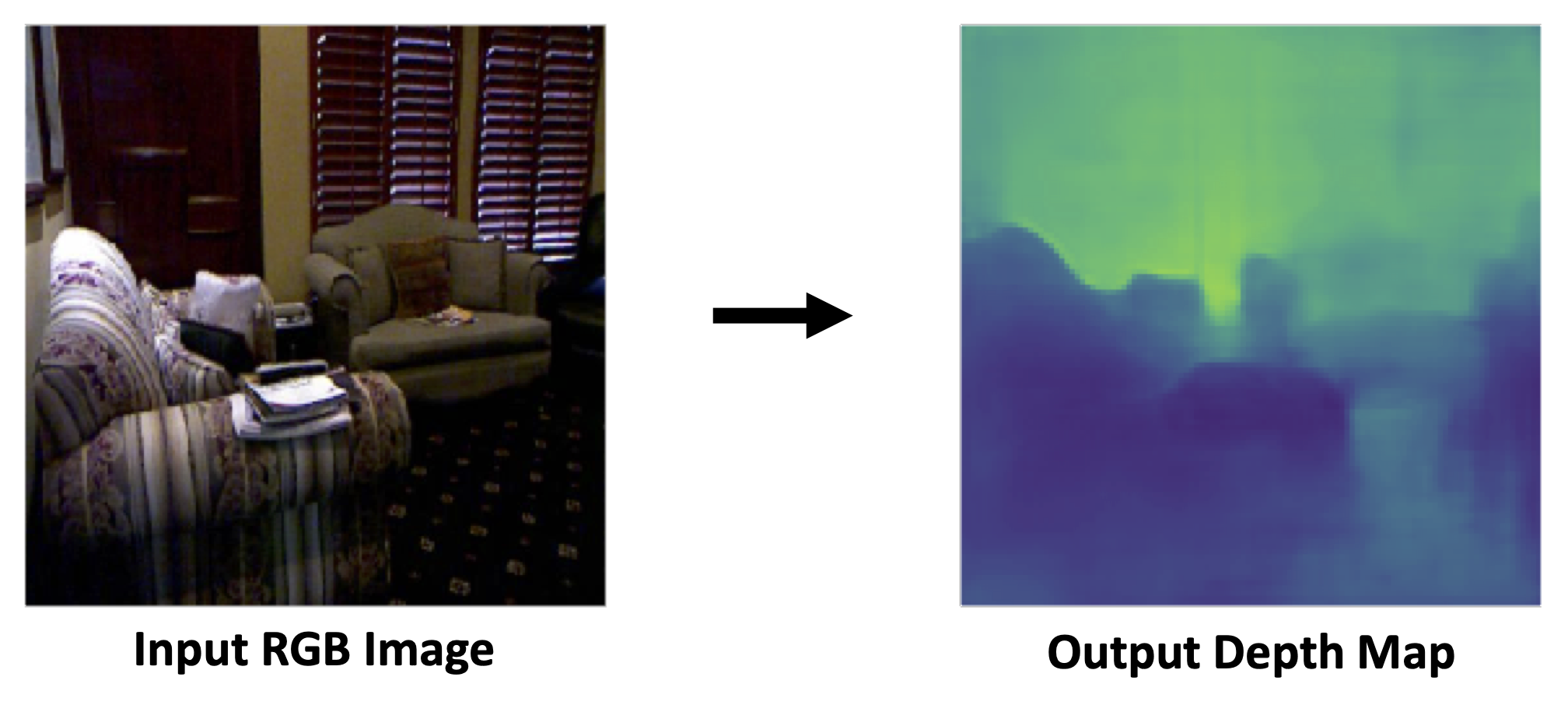

Depth sensing is critical for many robotic tasks such as localization, mapping and obstacle detection. There is growing interest in estimating depth from monocular RGB images, owing to the relatively low cost and small form factor of RGB cameras. However, state-of-the-art depth estimation algorithms are based on fairly large deep neural networks (DNNs) with high computational complexity and energy consumption. This makes real-time depth estimation on embedded platforms a significant challenge. Our work addresses this problem.

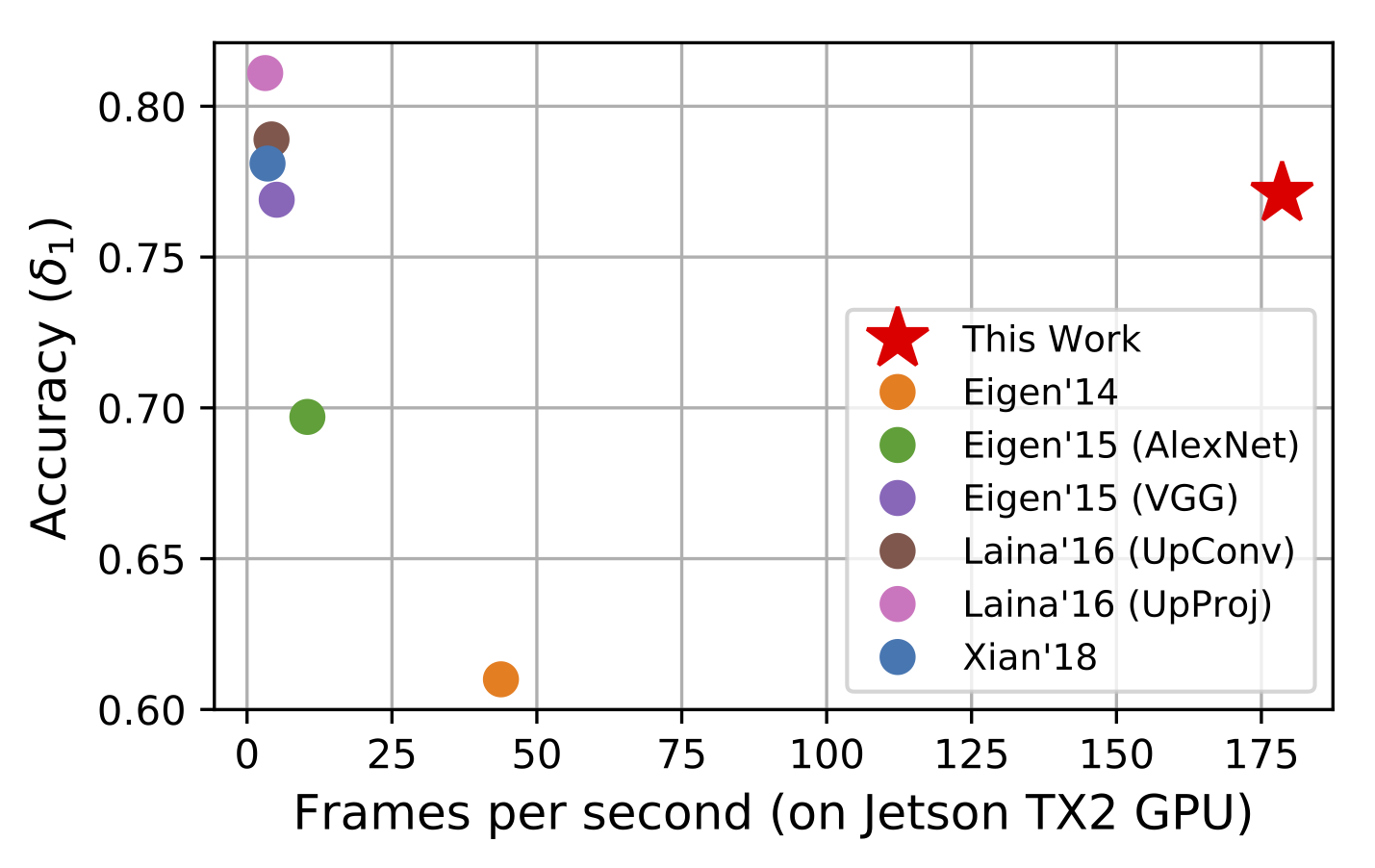

We first present FastDepth, an efficient low-latency encoder-decoder DNN composed of depthwise separable layers and incorporating skip connections to sharpen the depth output. After deployment steps including hardware-specific compilation and network pruning, FastDepth runs at 27-178 fps on the Jetson TX2 CPU/GPU, with a total power consumption of 10-12 W. Compared with prior work, FastDepth achieves similar accuracy while running an order of magnitude faster.
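To illustrate why depthwise separable layers reduce computation, the following minimal sketch counts multiply-accumulate operations (MACs) for a standard convolution and for its depthwise separable counterpart (a depthwise convolution followed by a 1x1 pointwise convolution). The layer shape is a hypothetical example and is not taken from FastDepth; the sketch also assumes stride 1 and an output with the same spatial size as the input.

```python
def standard_conv_macs(h, w, c_in, c_out, k):
    """MACs for a k x k standard convolution with an h x w x c_out output."""
    return h * w * c_in * c_out * k * k


def depthwise_separable_macs(h, w, c_in, c_out, k):
    """MACs for a k x k depthwise convolution followed by a 1x1 pointwise convolution."""
    depthwise = h * w * c_in * k * k   # one k x k filter per input channel
    pointwise = h * w * c_in * c_out   # 1x1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer shape, chosen only for illustration (not from FastDepth).
h, w, c_in, c_out, k = 56, 56, 128, 128, 3

std = standard_conv_macs(h, w, c_in, c_out, k)
sep = depthwise_separable_macs(h, w, c_in, c_out, k)
print(f"standard: {std} MACs")
print(f"depthwise separable: {sep} MACs")
print(f"reduction factor: {std / sep:.2f}")
```

For a fixed kernel size, the reduction factor is `c_out * k * k / (k * k + c_out)`, so it approaches `k * k` as the number of output channels grows.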

We then aim to improve energy efficiency by deploying FastDepth onto a low-power embedded FPGA. Using an algorithm-hardware co-design approach, we develop an accelerator in conjunction with modifying the FastDepth DNN to be more accelerator-friendly. Our accelerator natively runs depthwise separable layers using a reconfigurable compute core that exploits several types of compute parallelism and toggles between dataflows dedicated to depthwise and pointwise convolutions. We modify the FastDepth DNN by moving skip connections and decomposing larger convolutions in the decoder into smaller ones that map better onto our compute core. This enables a 21% reduction in data movement while ensuring high spatial utilization of the accelerator hardware. On the Ultra96 SoC, our accelerator runs FastDepth layers in 29 ms with a total system power consumption of 6.1 W. Compared with the TX2 CPU, the accelerator achieves a 1.5-2x improvement in energy efficiency.
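As a rough back-of-the-envelope check, energy per inference can be estimated as power multiplied by latency. The sketch below applies this to the accelerator figures quoted above (29 ms, 6.1 W). It assumes that the total system power stays constant over the inference and that the 29 ms layer runtime dominates, so the result is an approximation rather than a measured value; the function name is illustrative only.

```python
def energy_per_inference_joules(power_watts, latency_seconds):
    """Approximate energy per inference as average power times latency."""
    return power_watts * latency_seconds


# Accelerator figures quoted in the text: 6.1 W total system power, 29 ms runtime.
accel_power_w = 6.1
accel_latency_s = 0.029

accel_energy_j = energy_per_inference_joules(accel_power_w, accel_latency_s)
accel_rate = 1.0 / accel_latency_s  # inferences per second implied by the latency

print(f"{accel_energy_j:.3f} J per inference")
print(f"{accel_rate:.1f} inferences per second")
```

Under these assumptions the accelerator spends on the order of 0.18 J per inference. The same function could be applied to another platform once its power and latency are measured under comparable conditions.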